Microsoft is looking to develop native eye-tracking technologies for a variety of displays. Is this the end of the touchscreen?

The system could forever change the way we interact with technology.


Eye-tracking technology is not new: the concept has been around for a while, and Microsoft already ships it in its HoloLens 2 headsets. There are also plenty of third-party solutions, such as Tobii, that can be integrated into an array of XR/VR/AR headsets.

The Redmond-based tech giant even released a form of eye-tracking technology for displays back in Windows 10, to help people affected by certain conditions (such as ALS, a neurodegenerative disease that affects the muscles).

However, back then, the technology was not entirely native, as it required a compatible eye tracker, such as the one mentioned earlier.

In a turn of events that is hardly surprising, it seems that Microsoft is looking into developing native eye-tracking technology for displays, on Windows and beyond.

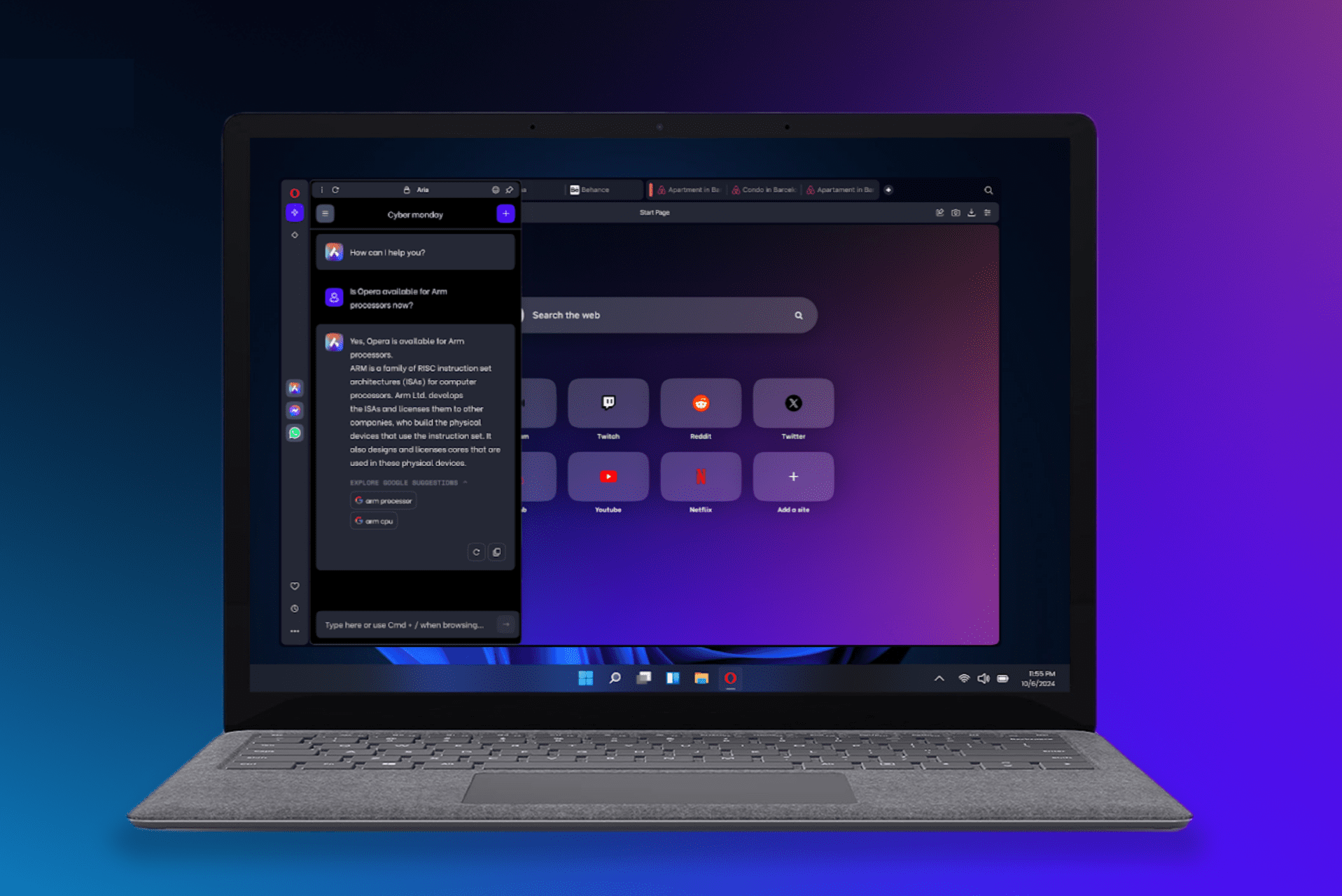

The Redmond-based tech giant recently published a paper describing a system that uses eye-gaze technology to select items on a user interface (UI). The native system would be different from the current eye-tracking technologies.

While it would still use your gaze data to let you choose items on a screen with your eyes, the technology comes with a unique characteristic: it lets users customize and configure the selection regions on displays, for more precise eye tracking.

The intelligent UI element selection system disclosed herein provides for automatically configuring or adjusting selection regions or boundaries. Selection regions may be applied to a set of UI elements and selection boundaries may be applied to individual UI elements.

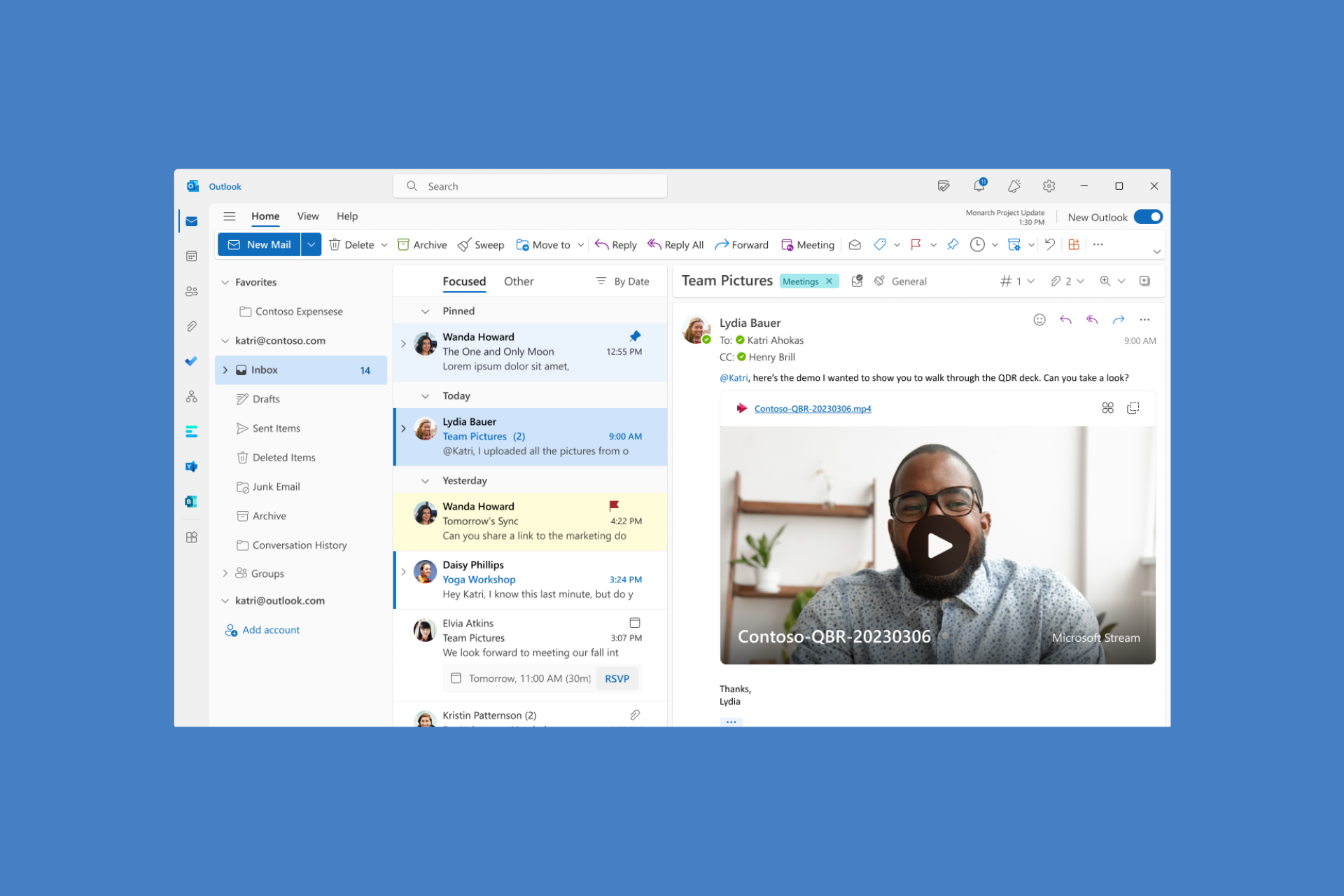

Another interesting thing is that the technology would assign a score to your gaze, so that it does not select everything your eyes happen to pass over. The system also assigns a score to each item on the current display, whether it belongs to an application, a browser, or the operating system itself.

It then compares these scores: the longer you look at an item on the screen, the higher your gaze score for it gets, and if it surpasses the score of that item, the item is selected.
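To make the comparison concrete, here is a minimal Python sketch of how such a score-based selection could work. It is an illustration only, not Microsoft's implementation: the class `GazeTarget`, the function `feed_gaze_sample`, and the `gain` and `decay` values are all hypothetical, and the paper as described here does not say how the scores are actually computed.

```python
from dataclasses import dataclass


@dataclass
class GazeTarget:
    """A UI item with a rectangular selection region (hypothetical model)."""
    name: str
    x: float
    y: float
    width: float
    height: float
    threshold: float          # the item's own score that the gaze must surpass
    gaze_score: float = 0.0   # grows while the user keeps looking at the item

    def contains(self, gx: float, gy: float) -> bool:
        return (self.x <= gx <= self.x + self.width
                and self.y <= gy <= self.y + self.height)


def feed_gaze_sample(targets, gx, gy, gain=1.0, decay=0.5):
    """Process one gaze sample and return the selected target, or None.

    Assumption: each sample inside a region adds `gain` to that item's gaze
    score, and each sample outside it subtracts `decay` (never below zero),
    so a passing glance fades away instead of triggering a selection.
    """
    selected = None
    for target in targets:
        if target.contains(gx, gy):
            target.gaze_score += gain
        else:
            target.gaze_score = max(0.0, target.gaze_score - decay)
        if selected is None and target.gaze_score > target.threshold:
            selected = target
    if selected is not None:
        # Start over after a selection so the item is not picked repeatedly.
        for target in targets:
            target.gaze_score = 0.0
    return selected
```

In this sketch, a button with a `threshold` of 3.0 is only selected once the fourth consecutive gaze sample lands on it, while a single stray glance at a neighboring button decays back to zero.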

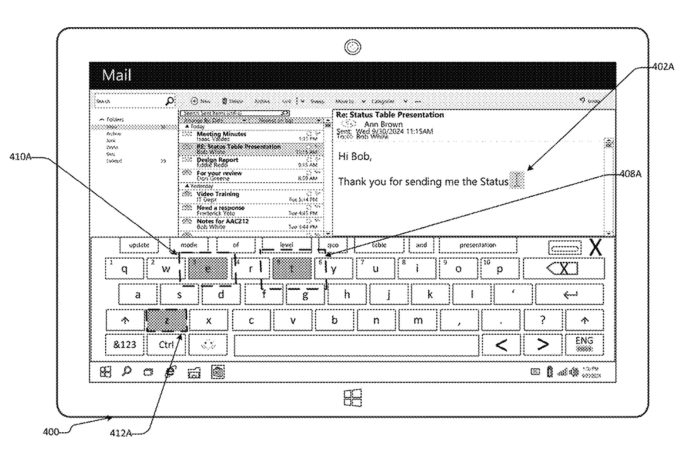

This is not all, though. The technology also has an intelligent UI system that predicts what you are about to type with your eyes and emphasizes those regions so that your gaze can be captured more reliably, resulting in a faster and more streamlined experience. Microsoft calls it eye-typing.

If a user is eye-typing a word that starts with ‘q’, then the selection region for the letter ‘u’ may be enlarged, since that letter is statistically more likely to follow the letter ‘q’ than other letters.

Microsoft
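The 'q' and 'u' example from the paper can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the probability table `NEXT_LETTER_PROB` contains made-up placeholder numbers rather than real language statistics, and the function `selection_scales` and its `max_boost` parameter are hypothetical names, not anything Microsoft has described.

```python
# Toy next-letter probabilities. The values are invented for illustration;
# a real system would presumably derive them from a language model.
NEXT_LETTER_PROB = {
    "q": {"u": 0.9, "a": 0.05, "i": 0.05},
}


def selection_scales(previous_letter, keys, max_boost=0.5):
    """Return a size multiplier for each key's selection region.

    Assumption: a key's region grows linearly with the probability that
    it follows `previous_letter`, by up to `max_boost` (50% by default).
    Keys with no known probability keep their normal size (1.0).
    """
    probs = NEXT_LETTER_PROB.get(previous_letter, {})
    return {key: 1.0 + max_boost * probs.get(key, 0.0) for key in keys}


# After the user eye-types 'q', the region for 'u' becomes the largest.
scales = selection_scales("q", ["u", "a", "z"])
largest_key = max(scales, key=scales.get)
```

Here `largest_key` ends up as `"u"`, while a key such as `"z"`, which has no entry in the toy table, keeps a multiplier of 1.0.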

Microsoft is developing it to support a variety of applications: users can control audio settings with their gaze and browse radio channels. Through a video interface, it can also be used to control video recordings or streams simply by looking at them.

The paper also theorizes that the eye-tracking-based display system can be used to access external memory sticks and storage, and that it can be used independently and locally: it can run on batteries, and if the system is tied to a network and the network goes down, all the devices will still be able to run it.

If Microsoft ends up releasing it, which seems likely, the technology could become the industry standard and effectively replace the classic touchscreen. There is a pattern here: mobile phones, for instance, have largely done without physical buttons for more than a decade, as touchscreens took over.

Then tablets (which are essentially mini PCs) came around, and the tech industry realized that laptops could have a touchscreen as well.

And while all computers today, including laptops, are released with the classic keyboard, there is a reason for it. Writing on a touchscreen still requires you to use your hands, and on a laptop, the design makes it quite impractical to do so, hence keeping the classic keyboard around.

But eye-typing? If done seamlessly, which this system promises to do, it can be a total game-changer. Sure, the industry will have to find something to keep our hands busy (maybe hand-gazing?), but it could greatly improve productivity.

The eye-care industry will most likely benefit from this technology as well.